Ground Control Points (GCPs)

- What is a ground control point?

- What are GCPs used for?

- What will GCPs be used for in this project?

Methods

A new project is created in Pix4D and the images from the first flight are imported. The camera's shutter model is changed to Linear Rolling Shutter. Next, the field-collected GCP coordinates, stored in a text file, are imported into the project. The coordinate order must be set to Y, X, Z, because the text file is formatted in that order. Once this is done, Initial Processing can be run.
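The Y, X, Z setting matters because swapping the columns would place every GCP at the wrong position. The sketch below shows what the reordering means. It assumes each line of the GCP file is `label,Y,X,Z` with no header row. The document does not show the actual file, so that layout, the coordinate values and the helper names (`read_gcps_yxz`, `to_xyz_lines`) are hypothetical and for illustration only.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class GCP:
    label: str
    x: float  # easting (or longitude)
    y: float  # northing (or latitude)
    z: float  # altitude


def read_gcps_yxz(text: str) -> list[GCP]:
    """Parse rows laid out as label,Y,X,Z (assumed layout, no header row)."""
    gcps = []
    for row in csv.reader(io.StringIO(text)):
        # Skip blank lines and any row that does not have exactly four fields.
        if len(row) != 4:
            continue
        label, y, x, z = (field.strip() for field in row)
        gcps.append(GCP(label=label, x=float(x), y=float(y), z=float(z)))
    return gcps


def to_xyz_lines(gcps: list[GCP]) -> str:
    """Write the same GCPs back out in label,X,Y,Z order."""
    return "\n".join(f"{g.label},{g.x},{g.y},{g.z}" for g in gcps)


# Made-up example rows in the assumed label,Y,X,Z layout.
sample = """GCP1,4512345.10,612345.20,350.5
GCP2,4512400.75,612290.00,348.2
"""

gcps = read_gcps_yxz(sample)
print(gcps[0].x, gcps[0].y)  # 612345.2 4512345.1
print(to_xyz_lines(gcps))
```

The parsed objects always store X and Y under their own names, so the column order is handled once when the file is read. This mirrors what choosing Y, X, Z in the import settings does.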

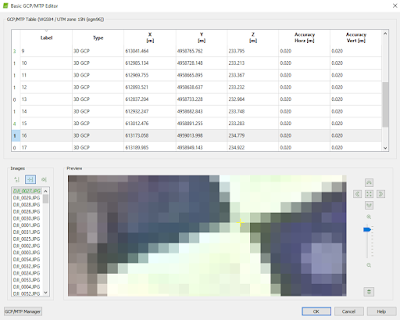

The DJI platform used to collect the images has an issue that causes significant altitude errors for the GCPs. The GCPs must therefore be edited in either the Ray Cloud Editor or the Basic Editor to correct these errors (Figure 1).

Figure 1: The editor options within Pix4D, which must be used to correct the GCPs when working with a DJI platform.

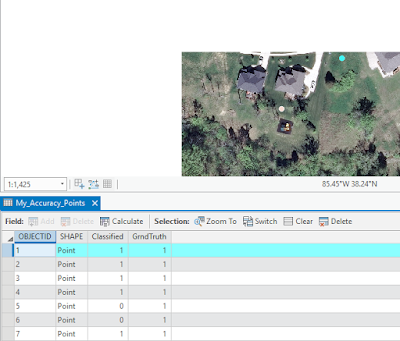

Once an editor is selected, the field notes describing where each GCP is located are used to place each GCP correctly (Figure 2). The Basic Editor is used in this project. In the Basic Editor, the view is zoomed into the location given in the notes to find the corresponding GCP in the imagery (Figure 2). Once the GCP is found, the yellow x is moved to its exact center to ensure accuracy.

Figure 2: Overview of the Basic Editor. The yellow x marks where each GCP was placed on import.

After the GCPs are corrected, the project is reoptimized. The GCP Manager is then used to open the Ray Cloud Editor, where the same process used in the Basic Editor is repeated: zoom into the GCP and move the yellow x to its center. Each GCP is marked in at least 5 images, and the marks are then applied to adjust that GCP. This is repeated for every GCP in the flight. Finally, the second and third processing steps (Point Cloud and Mesh; DSM, Orthomosaic and Index) are run to complete the project. Once the first flight's images are processed, the same steps are repeated for the second flight.
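The "at least 5 images per GCP" rule can be tracked with a simple checklist before the marks are applied. The sketch below assumes the marked images for each GCP have been noted down by hand into a dictionary. Pix4D does not necessarily expose them this way, so the data structure, image names and the function `gcps_needing_marks` are all hypothetical.

```python
MIN_MARKS = 5  # minimum number of marked images per GCP used in this workflow


def gcps_needing_marks(marks: dict[str, set[str]], minimum: int = MIN_MARKS) -> dict[str, int]:
    """Return each GCP label marked in fewer than `minimum` images,
    mapped to how many more marked images it still needs."""
    return {
        label: minimum - len(images)
        for label, images in sorted(marks.items())
        if len(images) < minimum
    }


# Hypothetical record of which images each GCP has been marked in.
marks = {
    "GCP1": {"IMG_0101.JPG", "IMG_0102.JPG", "IMG_0103.JPG", "IMG_0110.JPG", "IMG_0111.JPG"},
    "GCP2": {"IMG_0120.JPG", "IMG_0121.JPG"},
    "GCP3": set(),
}
print(gcps_needing_marks(marks))  # {'GCP2': 3, 'GCP3': 5}
```

Using a set per GCP means marking the same image twice is not counted as two marks.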

Figure 3 shows the first step of the next stage: a new project is created that merges the two flights from Litchfield Mine. The GCPs are imported again to tie the merged project to the ground coordinates.

Figure 3: This figure displays the selection

After Initial Processing is run, the Basic Editor is used again to check the accuracy of the GCPs (Figure 4). This step is important when merging two projects, because it ensures that they stitch together properly.

Figure 4: Correcting the GCPs of the two merged projects in the Basic Editor.

Figure 5: The two projects stitched together, displayed with the computed triangles.

Results

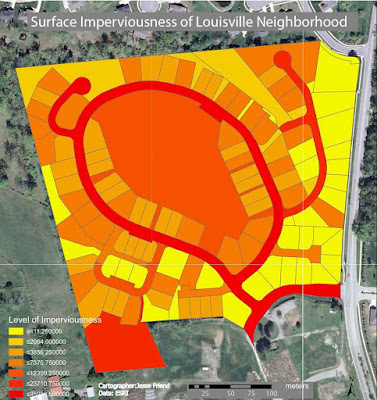

Projects processed with GCPs produce clearer results than projects processed without them.

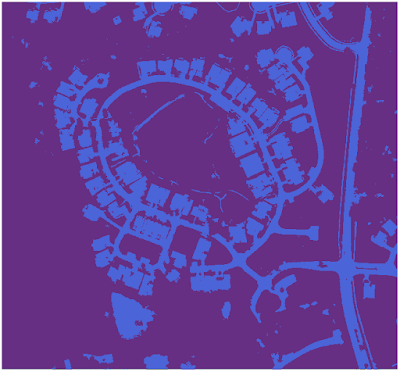

Figure 6 shows the merged DSM of Litchfield Mine, and Figure 7 shows Flight 1 processed without GCPs. Using GCPs reduces the noise in the DSM, and Figure 6 shows visibly higher accuracy than Figure 7.

Figure 6: DSM produced by merging the two projects.

Figure 7: Results of processing Flight 1 with no GCPs.

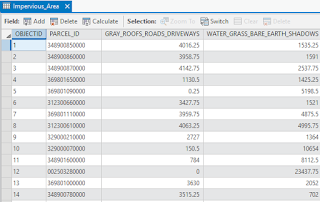

Figure 8 shows the merged orthomosaic. Variations in altitude are much more visible in the merged project than in the second flight processed without GCPs (Figure 9). Although Figure 8 includes both flights, the two results can still be compared. When they are compared as layers, the project that includes GCPs is clearly more accurate.

Figure 8: Orthomosaic of the two projects merged together.

Figure 9: Litchfield Mine Flight 2 processed without GCPs.